Took responsible approach to train our AI models: Apple

San Francisco, July 30

Tech giant Apple has responded to certain allegations regarding its AI models, saying it takes precautions at every stage — including design, model training, feature development, and quality evaluation — to identify how its AI tools may be misused or lead to potential harm.

The company said in a technical paper that it will continuously and proactively “improve our AI tools with the help of user feedback”.

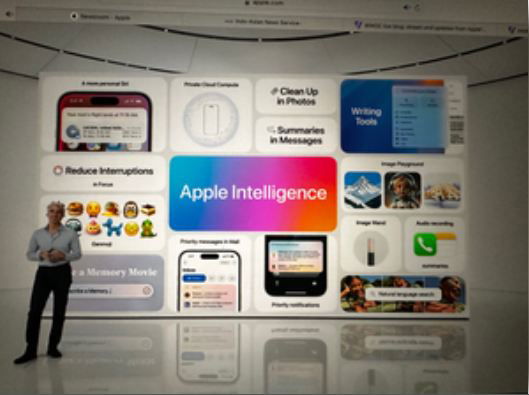

Last month, the company unveiled Apple Intelligence, which will bring several generative AI features to iOS, macOS and iPadOS over the next few months.

“The pre-training data set consists of… data we have licensed from publishers, curated publicly available or open-sourced datasets and publicly available information crawled by our web crawler, Applebot,” Apple wrote.

“Given our focus on protecting user privacy, we note that no private Apple user data is included in the data mixture,” the company added.

According to the technical paper, training data for the Apple Foundation Models (AFM) was sourced in a “responsible” way.

Apple Intelligence is designed with the company’s core values at every step and built on a foundation of industry-leading privacy protection.

“Additionally, we have created Responsible AI principles to guide how we develop AI tools, as well as the models that underpin them,” said the iPhone maker.

The company further said that no private Apple user data is included in the data mixture.

“Additionally, extensive efforts have been made to exclude profanity, unsafe material, and personally identifiable information from publicly available data. Rigorous decontamination is also performed against many common evaluation benchmarks,” Apple elaborated.

To train its AI models, the company crawls publicly available information using its web crawler, Applebot, while taking care to “respect the rights of web publishers to opt out of Applebot using standard robots.txt directives”.
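For readers unfamiliar with the opt-out mechanism, the following is a minimal sketch of how a robots.txt check might work, using Python's standard `urllib.robotparser` module. The robots.txt content, the `can_crawl` helper and the example URL are hypothetical illustrations; this is not Apple's code, and the paper does not describe how Applebot implements the check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt a publisher might serve to opt out of Applebot
# while still allowing every other crawler.
ROBOTS_TXT = """\
User-agent: Applebot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/news/article.html"
    print("Applebot allowed:", can_crawl(ROBOTS_TXT, "Applebot", page))
    print("OtherBot allowed:", can_crawl(ROBOTS_TXT, "OtherBot", page))
```

A crawler that honours these directives would skip every page on such a site when identifying itself as Applebot, while other crawlers would remain unaffected.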

“We take steps to exclude pages containing profanity and apply filters to remove certain categories of personally identifiable information (PII). The remaining documents are then processed by a pipeline which performs quality filtering and plain-text extraction,” Apple emphasised.
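The steps Apple describes (profanity exclusion, PII filtering, quality filtering and plain-text extraction) could be sketched roughly as below. Everything here is an assumption made for illustration: the word blocklist, the two regular expressions standing in for "certain categories" of PII, the minimum-word quality rule, and the order in which the steps run. The paper does not publish the actual filters.

```python
import re
from html.parser import HTMLParser

# Hypothetical placeholders; a real blocklist would be far larger.
BLOCKLIST = {"darn", "heck"}
# Simplified patterns for two PII categories (email addresses, phone numbers).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


class _TextExtractor(HTMLParser):
    """Collects the text nodes of an HTML document, ignoring the tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def extract_text(html: str) -> str:
    """Plain-text extraction with whitespace collapsed to single spaces."""
    extractor = _TextExtractor()
    extractor.feed(html)
    extractor.close()
    return " ".join(" ".join(extractor.parts).split())


def contains_profanity(text: str) -> bool:
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not words.isdisjoint(BLOCKLIST)


def redact_pii(text: str) -> str:
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def passes_quality(text: str, min_words: int = 5) -> bool:
    # Stand-in quality rule: drop very short documents.
    return len(text.split()) >= min_words


def process(pages):
    """Drop profane pages, redact PII, then keep documents that pass quality."""
    kept = []
    for html in pages:
        text = extract_text(html)
        if contains_profanity(text):
            continue
        text = redact_pii(text)
        if passes_quality(text):
            kept.append(text)
    return kept
```

In this sketch a page is discarded outright if it contains a blocklisted word, whereas PII is redacted in place so the rest of the document can still be used.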